Improving Data Center Efficiency with TaOx RRAM Memristors

EXECUTIVE SUMMARY

Problem

Due to the demand for AI, there is a need for faster processors, which gets challenging as we approach the limits of Moore’s Law.

Data centers consume lots of energy, which is unsustainable in the long-term future of AI.

Data centers use 40% of their energy consumption for cooling the servers, and approximately 18-22% of the total energy is spent on removing heat. As more energy is used, more heat is produced, reducing the effective use of energy.

Solution

Use TaOx RRAM memristors to perform matrix multiplication in memory, using the analog electrical behavior of the devices, for fast and energy-efficient neural network calculations.

Memristors can perform calculations efficiently at 716 TOPS/W, producing less heat in data centers, leading to decreased energy spent on cooling and powering the servers.

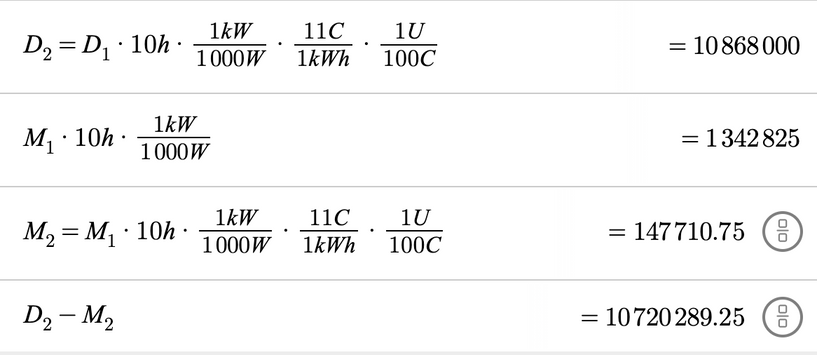

Microsoft can collaborate with Intel as an extension to their current deals to manufacture memristors for use in data centers.

Outcomes

By using TaOx memristors in data centers instead of current processing systems, we can reduce the cost of Microsoft’s data center usage by around $10.7 million USD for 10 hours of computation per net new data center. It also contributes to sustainability by reducing carbon emissions and energy consumed.

Optimizing Microsoft’s data centers for efficiency positions it to be the next leader in AI development and to overcome the data center bottleneck.

ENERGY CONSUMPTION IN DATACENTERS

THE PROBLEM

Moore’s Wall

Energy Consumption

Sustainability & Longevity

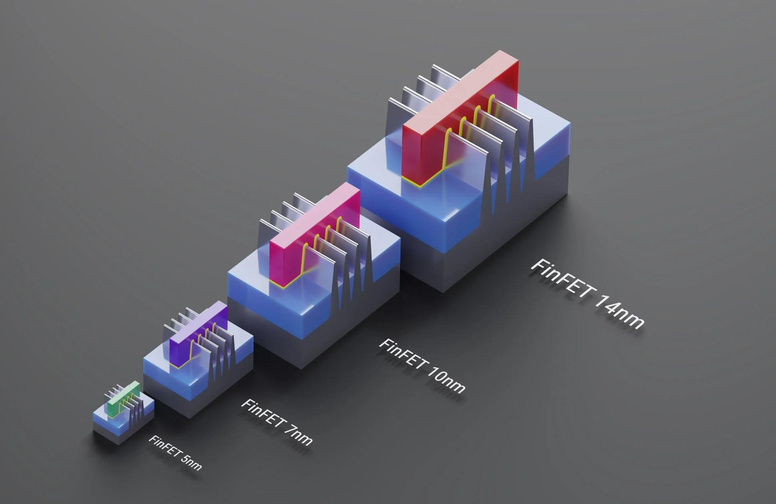

Future growth of chips efficiency and performance will be limited due to atomic size of transistors and the difficulty of further improving conventional hardware models for computation. This leads to a bottleneck where computation speed can no longer keep up with the demand for AI development.

AI acceleration only increases the necessity of energy. This energy cost will likely be the primary factor that makes or breaks further development, as neither a business nor the environment would like inefficient hardware that increases costs and heat produced.

Besides the energy consumption of computation, AI development also requires constant resources and use excess energy on cooling data centers. The resource-intensity and redundant energy usage makes the process unsustainable.

WHAT THE PROBLEM IS

Importance of Energy Efficiency

Data centers currently face three main pain points that will become significant barriers to further development, and energy usage is at the center of all of them. Data centers already consume 200 TWh of energy a year, more than several smaller nation-states and the same as 50,000 homes. Combined with producing 3.7% of global carbon emissions, this runs counter to sustainability goals and adds to the threat of climate change.

At the same time, much of the energy is used inefficiently, both on cooling and on computing. A good solution would surmount these challenges by lowering energy usage while maintaining performance.

40%

Energy Usage

40% of energy usage in data centers is dedicated to cooling, while the rest is used for running computers.

300

Data Center Demand

Microsoft alone owns 300 data centers. Their performance and energy efficiency demand will only grow as AI development increases.

200 TWh

Sustainability Goals

Data centers create 3.7% of global carbon emissions through their energy usage and use the same energy as 50,000 homes (200 TWh).

STATUS QUO

Current Computing Method

In a traditional computer, there are two main blocks: memory, and a CPU or GPU. A bus or memory controller connects them and transfers data back and forth to perform computations. As transistors have continued to scale, CPUs and GPUs have become faster and more energy efficient, so the time and energy spent moving data back and forth has become the more important limitation.

Data communication dominates the model runtimes and energy consumption in data-intensive computations such as deep learning.

STATUS QUO

Sustainability of Current Computing Method

To train 1 NLP model: the equivalent carbon footprint of 5 cars, and 1 week of training in a data center.

As demands for AI grow exponentially and the size and complexity of AI models increase, there will be a need for innovation and more energy-efficient AI computing. Instead of spending time and energy transferring data back and forth, what if we could design a system that eliminated this data movement and performed the functions of both the memory and the CPU/GPU? This would greatly increase the speed and performance of training and running AI models which use data-intensive computations.

TaOx RRAM Memristors

- Memristor Theory

- TaOx

- Challenges

- Comparisons

- Saving Billions in Cost

Memristors in a Nutshell

1. Memristors are resistors that can store data in the form of resistive states.

2. Memristors are able to maintain their states (and data) without extra power.

3. Memristors are analog devices due to relying on resistive states, and are thus not binary.

4. Memristors can be more accurate the larger the difference between their high and low resistive states.

THE SOLUTION

What are RRAM Memristors?

Memristors are electrical components; the name is short for “memory resistor”. RRAM stands for resistive random access memory. A memristor stores data by “remembering”, through its resistive state, the charge that has passed through it. As an analog device, a memristor changes its resistive state when a specific voltage is applied. Memristors are built using metal oxides that have a clear differentiation between their high resistive state (HRS) and low resistive state (LRS).

Most importantly, an RRAM memristor is a non-volatile memory device, meaning it does not consume energy to maintain data.

Conventional computing always needs power to maintain stored memory, which is a redundancy that memristors eliminate.

THE SOLUTION

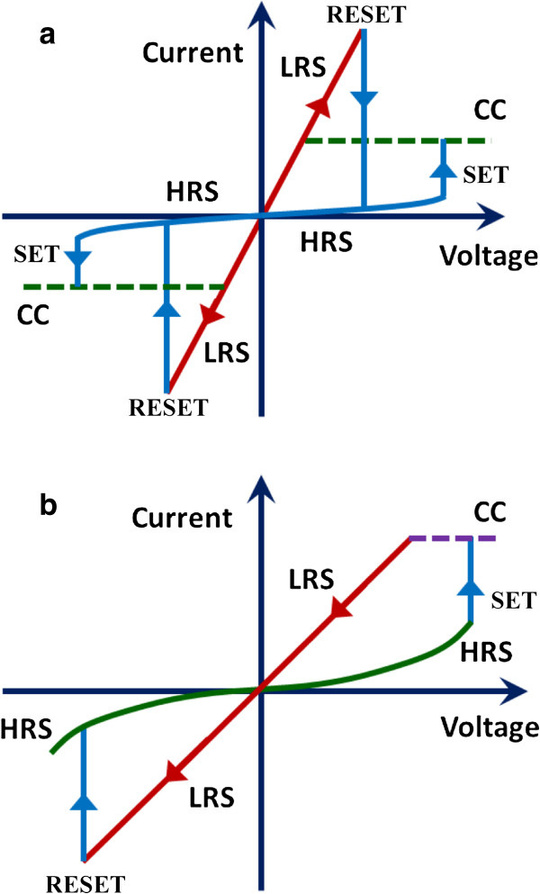

How do RRAM Memristors Work?

Resistive States

Memristors are usually metal oxides with changing resistivity.

The resistance values of the memristors can be set or reset to a high resistive state (HRS) or low resistive state (LRS) by applying a specific voltage. When the resistance values are changed, the memristors “remember” their resistive state by storing it until another change. This state is the information that memristors store for data or computation. The larger the difference between HRS and LRS, the more accurate computations are. This is because higher HRS/LRS means differentiation between “bits” is easier.

Similar to a neuron’s action potential, energy is used only once a stimulus is applied. This means memristors do not use energy passively when simply storing data, unlike other chips, which makes their power consumption significantly lower than that of most chips.
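The set, reset, and retention behavior described above can be sketched as a toy two-state model. This is an illustration only, not a device model: the class name `MemristorCell`, the threshold voltages, the read voltage, and the 1 kΩ low-resistance value are all assumptions made for the example. The high-resistance value is chosen only so that the HRS/LRS ratio matches the 44,800 figure quoted for TaOx.

```python
class MemristorCell:
    """Toy two-state memristor cell (illustrative, not a physical model)."""

    def __init__(self, r_lrs=1_000.0, r_hrs=44_800_000.0,
                 v_set=1.0, v_reset=-1.0):
        self.r_lrs = r_lrs        # low resistive state in ohms (assumed)
        self.r_hrs = r_hrs        # high resistive state in ohms (assumed)
        self.v_set = v_set        # voltage that switches the cell to LRS (assumed)
        self.v_reset = v_reset    # voltage that switches the cell to HRS (assumed)
        self.resistance = r_hrs   # start in the high resistive state

    def apply(self, voltage):
        """Apply a voltage; the state changes only past a threshold."""
        if voltage >= self.v_set:
            self.resistance = self.r_lrs
        elif voltage <= self.v_reset:
            self.resistance = self.r_hrs
        # Any voltage in between leaves the stored state untouched,
        # which is the non-volatile "remembering" behavior.

    def read(self, v_read=0.1):
        """Return the read current (amperes) at a small, non-switching voltage."""
        return v_read / self.resistance


cell = MemristorCell()
cell.apply(1.2)          # set: switch to LRS
i_lrs = cell.read()
cell.apply(0.0)          # no stimulus: state is retained
cell.apply(-1.2)         # reset: switch back to HRS
i_hrs = cell.read()
print(f"read-current ratio LRS/HRS: {i_lrs / i_hrs:.0f}")
```

The wider the gap between the two read currents, the easier it is for the readout circuit to tell the states apart, which is the intuition behind preferring a large HRS/LRS ratio.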

THE SOLUTION

How do RRAM Memristors Work?

Crossbar Structure

Memristors are usually arranged in crossbar structures. These perform vector-matrix multiplication, that is, multiplying many pairs of numbers and adding the products up, which is at the heart of every deep neural network.

Crossbars are 3D structures, where each memristor is sandwiched between 2 perpendicular wires. When a specific voltage (V) is applied across a memristor, its conductance (G) and the voltage (V) multiply to give the output current (I = G × V).

All the individual currents flowing into the same output wire are then added together to complete the matrix multiplication for computation.
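The crossbar operation described above can be written out in a few lines. The following is a minimal sketch of an ideal crossbar, ignoring wire resistance, noise, and device variation; the function name and the conductance and voltage values are hypothetical and chosen only for illustration.

```python
def crossbar_output_currents(conductances, voltages):
    """Return the current collected on each column wire of an ideal crossbar.

    conductances[i][j] is the conductance (siemens) of the memristor joining
    row wire i to column wire j; voltages[i] is the voltage on row wire i.
    """
    n_rows = len(conductances)
    if n_rows != len(voltages):
        raise ValueError("need one input voltage per row wire")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Each device contributes I = G * V; currents that share a
            # column wire simply add up.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 3x2 weight matrix stored as conductances (siemens)
G = [
    [1e-6, 2e-6],
    [3e-6, 4e-6],
    [5e-6, 6e-6],
]
V = [0.1, 0.2, 0.3]  # input vector encoded as row voltages (volts)

I = crossbar_output_currents(G, V)
print(I)  # column currents in amperes
```

In hardware the two nested loops do not exist: every multiplication and every sum happens at once in the analog domain, which is where the speed and energy advantage comes from.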

THE SOLUTION IN DETAIL

TaOx RRAM Memristors

We propose tantalum oxide memristors as the clear solution mechanism for computational speed, scalability, energy efficiency, and accuracy.

0.1-10 fJ

2 nm

716 TOPS/W

44800

First, RRAM memristors are generally quite fast computationally: many use only 0.1-10 femtojoules (fJ = 10⁻¹⁵ J) of switching energy. In other words, a memristor only needs energy on the scale of 10⁻¹⁵ joules each time it switches. Memristors also only use 1-10 fJ per computation.

Second, memristors are highly compact in size (~2 nanometers) and take up less room for the same computational power (about 41% of the space of most field-programmable gate arrays), making them easy to scale.

Third, TaOx memristors have an exceedingly large tera operations per second per watt (TOPS/W) value, meaning they have high energy efficiency. For comparison, most computers operate at around 5 to 10 TOPS/W, while TaOx SRAM memristors can operate at 716 TOPS/W. RRAM memristors may have different numbers due to their non-volatile nature, but TaOx consistently has a high value.

Lastly, TaOx memristors have high accuracy, especially for an analog device that does not have the simplicity of binary calculations. This is due to their high HRS-LRS ratio of around 44800, which means differentiating between HRS and LRS is easier and calculations are more precise. TaOx memristors are also highly stable.
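To make the TOPS/W comparison concrete, here is a small sketch of how efficiency translates into power draw for a fixed workload. The 1,000 TOPS workload is a hypothetical number picked for the example, and the 5 TOPS/W baseline is simply the low end of the 5 to 10 TOPS/W range quoted above.

```python
def watts_needed(throughput_tops, efficiency_tops_per_watt):
    """Power (watts) required to sustain a throughput at a given efficiency."""
    return throughput_tops / efficiency_tops_per_watt


workload_tops = 1000.0                               # hypothetical workload
conventional_w = watts_needed(workload_tops, 5.0)    # low end of the quoted range
taox_w = watts_needed(workload_tops, 716.0)          # TaOx figure quoted above

print(f"conventional: {conventional_w:.1f} W")
print(f"TaOx:         {taox_w:.2f} W")
print(f"ratio:        {conventional_w / taox_w:.1f}x")
```

Because power scales inversely with TOPS/W, the ratio of the two efficiency figures is also the ratio of the power bills for the same amount of computation.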

THE SOLUTION: CONTINUED

Comparing the Solution

| Metric | Status-Quo System | Solution System (TaOx) |
| --- | --- | --- |
| Energy Efficiency (TOPS/W) | 5.654 (Nvidia H100 GPU) | 716 (TaOx SRAM, RRAM may differ slightly) |
| HRS/LRS (Memristors) | 5,000 (Ti/SiO2 RRAM) | 44,800 (TaOx RRAM) |
| Power Used for Memory | ~50% of total | ~0% of total |
| Accuracy (AI) | 83.55% (SNN) | 82% (SNN/Memristor) |
| Space Required for Equal Performance | 100% (Traditional FPGA relative to itself) | 41% (Hybrid-Memristor FPGA) |
| Cost of running a data center for 10 hours (only computational cost) | 10.9 million USD | 0.148 million USD |

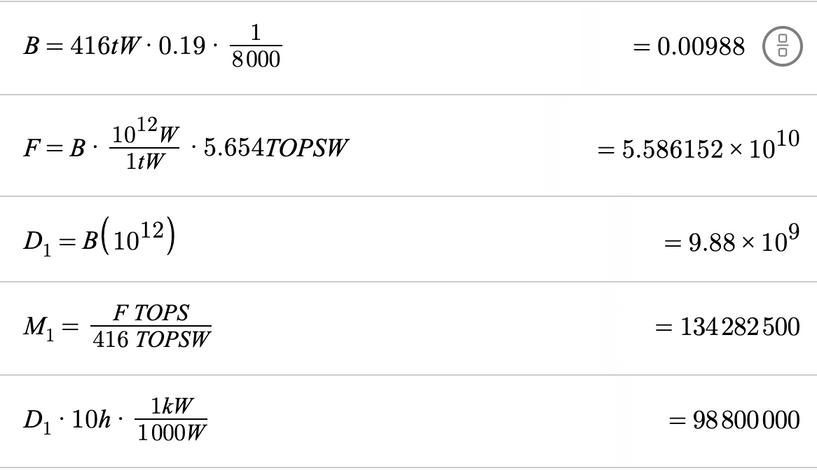

THE SOLUTION: COST

Cost Reduction for Data Center Usage

9,880,000 kW

416 TW of power is used by all data centers globally. Around 19% of that is used on computing (ignoring memory, cooling, etc.). If we assume power is split equally across roughly 8,000 data centers, one data center uses 416 TW * 0.19 * 1/8,000 = 9,880,000 kW of power for computing.

134,000 kW

9,880,000 kW of power at the Nvidia H100’s 5.654 TOPS/W delivers about 5.586 * 10¹⁰ TOPS. The same TOPS is then divided by the TaOx memristor efficiency (716 TOPS/W) to get the power memristors would use, taken here as about 134,000 kW.

$10.9 million

Now, running at the conventional power of 9,880,000 kW for 10 hours comes to approximately 98,800,000 kWh of energy. Globally, the average cost of electrical energy is around 11 cents per kWh. So, this would cost 98,800,000 * 11/100 = $10,868,000 USD, or 10.9 million dollars.

$0.148 million

Using memristors, the power needed is about 134,000 kW, which results in 1,342,825 kWh of energy over the same 10 hours. Multiplying by the electricity rate, the total cost comes to $147,710.75 USD, or 0.148 million dollars.

$10.7 million

For every 10 hours of computation, Microsoft effectively saves $10.7 million USD for each new data center built with TaOx memristors instead of conventional servers (not including other cost savings, such as reduced cooling).
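The energy and cost steps of this estimate can be reproduced with a short script. This is a sketch of the arithmetic only: it takes the two power figures as given inputs rather than deriving them, and the memristor power of 134,282.5 kW is the unrounded value implied by the 1,342,825 kWh energy figure over 10 hours. The function names are made up for the example.

```python
def energy_kwh(power_kw, hours):
    """Energy used when drawing a constant power for a number of hours."""
    return power_kw * hours


def cost_usd(energy_in_kwh, rate_usd_per_kwh=0.11):
    """Electricity cost at a flat rate (11 cents per kWh, as assumed above)."""
    return energy_in_kwh * rate_usd_per_kwh


HOURS = 10
conventional_kw = 9_880_000.0   # per-data-center compute power from the estimate
memristor_kw = 134_282.5        # implied by 1,342,825 kWh over 10 hours

conventional_cost = cost_usd(energy_kwh(conventional_kw, HOURS))
memristor_cost = cost_usd(energy_kwh(memristor_kw, HOURS))
savings = conventional_cost - memristor_cost

print(f"conventional: ${conventional_cost:,.2f}")
print(f"memristor:    ${memristor_cost:,.2f}")
print(f"savings:      ${savings:,.2f}")
```

Changing `HOURS` or the electricity rate shows how sensitive the headline savings figure is to those two assumptions.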

THE SOLUTION: COST

Cost Reduction for Data Center Usage (Calculations)

Variables

B = One Data Center’s Power Usage on Computing

F = TOPS of power using NVIDIA

D1 = B in W

M1 = Power Usage using Memristor’s TOPS/W (W)

D2 = Cost of D1 Power (USD)

M2 = Cost of M1 Power (USD)

Units

U = USD

C = cent (USD)

TOPS = tera operations per second

TOPSW = tera operations per second per watt

W = watt

h = hours
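One way to write the relationships among these variables is shown below. This is a reconstruction from the description on the previous cost slide, not the original formulas. The symbols $\eta_{\text{GPU}}$ and $\eta_{\text{mem}}$ (efficiency in TOPS/W of the GPU and the memristor system), $t$ (run time in hours), and $r$ (electricity rate in USD per kWh) are introduced here for the sketch. $D_1$ and $M_1$ are in watts, so they are divided by 1000 to convert to kW before multiplying by hours.

$$
\begin{aligned}
F &= D_1 \cdot \eta_{\text{GPU}} \\
M_1 &= \frac{F}{\eta_{\text{mem}}} \\
D_2 &= \frac{D_1}{1000} \cdot t \cdot r \\
M_2 &= \frac{M_1}{1000} \cdot t \cdot r \\
\text{Savings} &= D_2 - M_2
\end{aligned}
$$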

THE SOLUTION: COST

Cost Reduction for Data Center Cooling

In addition to the savings from reduced computation power, memristors also reduce the amount of energy spent on cooling.

1.5 PUE

As an estimate, most data centers had a power usage effectiveness (PUE) value of around 1.58 as of a few years ago. If we assume the average data center’s PUE can be reduced to around 1.5, then for every unit of energy spent on computation, another 50% is spent on cooling.

$16.3 million

Thus, the monetary cost of powering computation in a conventional data center (10.9 million USD) is multiplied by 1.5, resulting in around $16,350,000.

$0.22 million

The same is done for the cost of a memristor-based data center (0.148 million USD), which comes to approximately $222,000.

$16.1 million

The savings for running a memristor-based data center for 10 hours compared to conventional means is now even higher at around $16,100,000 USD.
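The cooling adjustment is a single multiplication, since PUE is defined as total facility energy divided by the energy used by the computing equipment. A minimal sketch using the rounded cost figures from above (the function name is hypothetical):

```python
def facility_cost(compute_cost_usd, pue):
    """Scale a compute-only energy cost up to a whole-facility cost using PUE."""
    return compute_cost_usd * pue


PUE = 1.5
conventional_total = facility_cost(10_900_000, PUE)   # 10.9 million USD compute cost
memristor_total = facility_cost(148_000, PUE)         # 0.148 million USD compute cost
savings = conventional_total - memristor_total

print(f"conventional: ${conventional_total:,.0f}")    # $16,350,000
print(f"memristor:    ${memristor_total:,.0f}")       # $222,000
print(f"savings:      ${savings:,.0f}")               # $16,128,000
```

Note that this treats the overhead as proportional to compute energy, which is the assumption built into using a fixed PUE for both systems.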

1

A primary challenge that holds back memristor application is the cost. Currently, no industry standards exist for memristor prices and memristors mostly remain as a research interest.

The base material of our recommended memristor (Ta2O5) costs around $30,000 USD for a metric ton, which is not unreasonably high.

2

Present infrastructure does not exist for memristors in data centers, making the actual implementation of the technology difficult.

Considering that memristor integration has a high ROI, the upfront investment to develop this infrastructure should not deter Microsoft. Also, Intel has previously worked with memristors, so cooperation could help accelerate the building process.

3

Currently, memristors (like all analog devices) are less precise than binary/digital systems are.

As AI is inherently statistical, minor imprecision in calculations is less relevant than in traditional computing. TaOx memristors have such a high HRS-LRS ratio that differentiation is easier and calculations are closer to binary.

Future Outlook

Memristor Market Growth

Major Manufacturers

- Samsung Electronics

- AMD Inc

- IBM Corporation

- Hewlett-Packard

- Sony Corporation

- Intel Corporation

With the backing of large manufacturers, industry analysts have projected market growth ranging anywhere from 37.06% CAGR to 69.9% CAGR. This growth indicates that competitors are investing in this technology to improve their systems. If Microsoft wants to remain competitive, it must begin acting now and investing in this research, since integrating the technology into its own systems involves many considerations that take time to work out.
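For a sense of how far apart the two CAGR projections land, compound growth can be sketched as follows. The starting value of 100 and the 5-year horizon are placeholders chosen for illustration, not figures from the market reports.

```python
def project_market_size(start_value, cagr, years):
    """Compound a starting market size forward at a constant annual growth rate."""
    return start_value * (1.0 + cagr) ** years


start = 100.0   # hypothetical starting market size (arbitrary units)
years = 5       # hypothetical horizon

low = project_market_size(start, 0.3706, years)    # 37.06% CAGR
high = project_market_size(start, 0.699, years)    # 69.9% CAGR

print(f"low estimate:  {low:.1f}")
print(f"high estimate: {high:.1f}")
```

Even the lower projection multiplies the market several times over within a few years, which is the point behind the call to act early.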

$8228 mil

$282.5 mil

Implementation in Data Centers

- Case Studies

- Considerations

- Future Outlook and Roadmap

CASE STUDY

NeuroPack and ArC One

- 1971: Memristors theorized by Leon Chua

- 2008: Memristors demonstrated; ArC One simulator built by HP Labs

- 2022: AI run on simulated memristor; SNN run on NeuroPack, based on ArC One

Researchers developed NeuroPack, an algorithm-level Python-based simulator for memristor-empowered neuro-inspired computing.

They ran a spiking neural network (SNN) to classify handwritten digits from the MNIST database and achieved an 82% accuracy, comparable to the 83.55% accuracy achieved by a similar SNN model without memristors.

The memristors act as synapses, the connections between neurons in the network, which facilitate the learning process by adjusting their resistance states, leading to changes in the weights of the connections.

Running artificial neural networks (ANNs) on simulated memristors is significant since it indicates that memristors are capable of training and running ANNs with accuracy similar to that of models trained on GPUs.

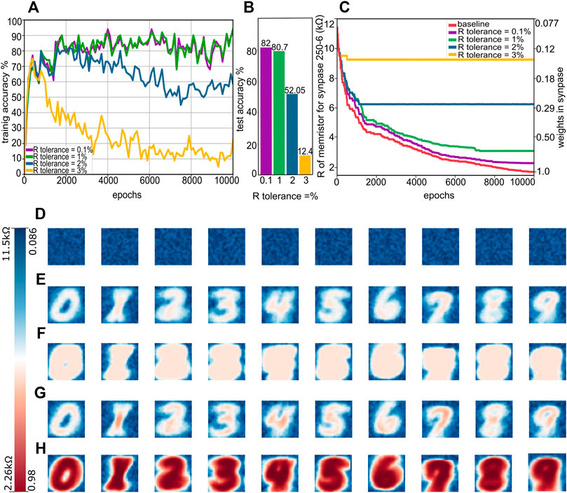

[A] The graph shows the improvement over training epochs for different R tolerance values, which are used to determine when the memristor's resistive state has sufficiently converged to the target value during the weight update process. The smaller the R tolerance, the more precise and computationally intensive the update. In the research, an R tolerance value of 1% did not significantly affect accuracy and offered the best balance.

[B] The test accuracy of the model, trained with different R tolerance values. The models trained on R tolerance values of 0.1% and 1% have similar accuracies, and past 1%, the accuracy drastically drops.

[C] As the neural network is trained, the weights of the synapses are adjusted. The graph shows how a difference in R tolerance directly correlates to the tolerance at which the weights are tuned.
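The role of the R tolerance can be illustrated with a simple program-and-verify loop. This is a hypothetical sketch, not NeuroPack's actual update algorithm: the function name, the assumption that each pulse closes half of the remaining gap, and the resistance values are all made up to show why a tighter tolerance costs more programming pulses.

```python
def program_to_target(resistance, target, r_tolerance,
                      step_fraction=0.5, max_pulses=100):
    """Pulse a device toward a target resistance until it is within tolerance.

    r_tolerance is a fraction of the target (0.01 means 1%). Each pulse is
    assumed to close a fixed fraction of the remaining gap.
    """
    pulses = 0
    while abs(resistance - target) / target > r_tolerance and pulses < max_pulses:
        resistance += step_fraction * (target - resistance)
        pulses += 1
    return resistance, pulses


start_ohms, target_ohms = 10_000.0, 5_000.0   # hypothetical values
for tol in (0.1, 0.01, 0.001):
    final, pulses = program_to_target(start_ohms, target_ohms, tol)
    print(f"tolerance {tol:>6.1%}: {pulses:2d} pulses, final R = {final:.2f} ohms")
```

A looser tolerance stops the loop sooner but leaves the stored weight further from its intended value, which is the precision versus cost trade-off the panels above describe.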

CASE STUDY

BNN Powered by a Small Solar Cell

- 2024: AI run on memristor module; BNN run using near-memory computing

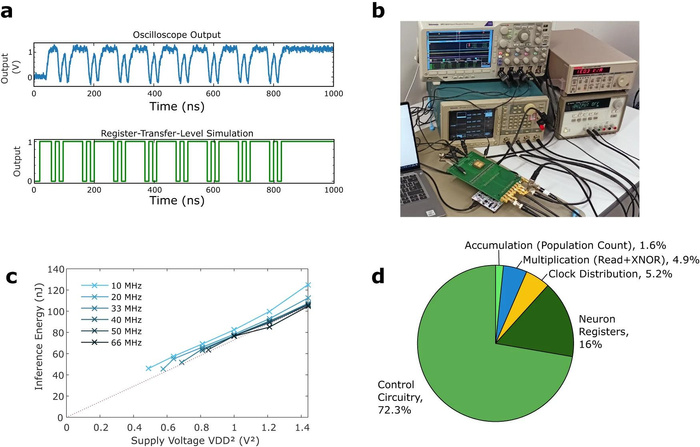

Researchers developed a memristor-based binarized neural network (BNN) with near-memory computing powered by a miniaturized solar cell.

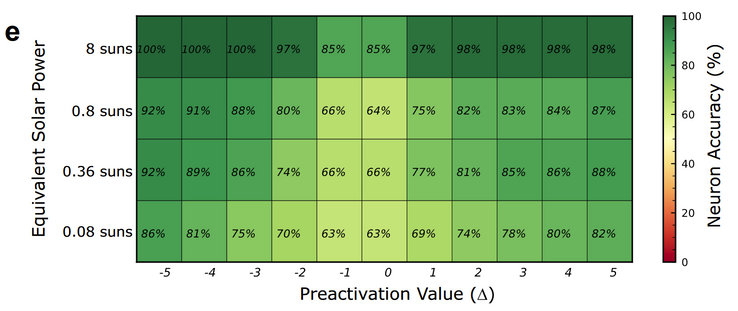

This study focused on energy-efficient computing with low and unstable power input. When powered at 1.23 volts and 10 milliamps, the output neuron activations remained at very high levels of accuracy. The lowest power point tested was 0.7 volts and 0.1 milliamps (45 nJ); the system remained functional, still with good accuracy but with increased error rates.

When evaluating the classification accuracy of their hardware between the power output from 8 suns (1.23V) and 0.08 suns (0.7V), the researchers saw only a 0.7% drop in accuracy for AI models recognizing handwritten digits from the MNIST dataset, and a reduction in accuracy from 86.6% to 73.4% for the CIFAR-10 task.

This study shows that running artificial neural networks (ANNs) with memristors can maintain high accuracy while operating at much lower energy consumption than current methods.

[e] The graph shows the mean measured accuracy of the output neuron activations for each preactivation value with different power consumption. The neurons maintain their accuracy even in low power output from the solar cell.

[b] The physical circuit board with memristors and a miniaturized solar cell.

INTEGRATION

Integration Considerations | Partnerships

Startups

The first course of action, which Microsoft could take immediately, is to search for and acquire startup companies that are currently innovating in the field. Analog computing is an exciting and growing industry in which smaller companies can move quickly. Beyond the case studies earlier, many more groups working on memristors and neuromorphic computing systems could reach their targets with more funding. By purchasing startups, Microsoft could gain early access to emerging memristor technology and accelerate research and development. This also allows Microsoft to test the compatibility of startup memristor computing with Azure. As the case studies show, memristor-AI software should be possible, but startups help verify it. Azure is well-placed for this because it was tested with neuromorphic computing in the past.

The second course of action is to secure Intel’s production of memristor chips. Intel’s $15 billion deal with Microsoft has already produced custom-made chips and silicon. Intel has also worked on memristor projects before (such as Loihi 2, a neuromorphic computing project which uses memristor technology to run), and is therefore a prime candidate for Microsoft’s future in memristor development. Conveniently, Intel has the resources to custom-make memristors (perhaps surpassing current TaOx RRAM models) that increase the efficiency of computation beyond all other solutions. Even achieving the TaOx record TOPS/W could save billions in costs, as demonstrated earlier with an example that only considers 10 hours’ worth of computation.

DEVELOPMENT AND IMPLEMENTATION EXECUTION

Future Outlook and Roadmap

Initiate the process by narrowing the focus to regions where Microsoft’s integration challenges are most pronounced. Scale the application of the memristor to high-load data centers for real-world testing. An ideal location to begin rolling out this technology would be the United States, given that it is the headquarters of many emerging and mature AI companies that will use these data centers, which shortens the ROI timeline. Data center support is also more common in the U.S. than in countries that are less developed or less focused on innovation.

Late 2024

Purchasing Startups

Purchasing small startups, such as Extropic and Intrinsic Semi, will decrease the overall R&D time necessary and would allow Microsoft to focus more on the integration strategy within both its existing and future data centers. At the same time, Microsoft can test the efficacy of memristor technology in Azure, for the aforementioned reasons of both Azure’s previous neuromorphic computing work and memristors’ probable viability in SNNs.

Early-Late 2025

Partnering with Intel

As a partnership already exists between Microsoft and Intel, the negotiations to manufacture memristors should take less time than starting anew or building in-house manufacturing at Microsoft. This allows Microsoft to begin creating and implementing larger memristor structures (crossbar computers, neuromorphic, etc.) into data centers to optimize efficiency. In other words, it’s the fastest method to begin saving massive costs and using less energy.

2026

Manufacturing

Once a design is developed in-house, Intel can begin manufacturing. Since Intel has manufactured memristors in the past, they should already have existing infrastructure to support the process.

2026-2027

Integration

Once the chips are manufactured, Microsoft can begin integrating them into the systems in its upcoming data centers.

On a more personal note,

We would like to give our sincerest gratitude for allowing us to work on Microsoft’s sustainability and innovation problem.

We hope that our collective efforts have brought forth fresh perspectives and innovative ideas.

If you have any questions or concerns, please feel free to reach out to any of us.

Best regards,

Chloe, Daniel, and Prajwal